When Stefanie Tellex was 10 or 12, around 1990, she learned to program. Her great-aunt had given her instructional books, and she would type code into her father’s desktop computer. One program she typed in was ELIZA, a famous artificial intelligence program that aped a psychotherapist. Tellex would tap out questions, and ELIZA would respond with formulaic text answers. “I was just fascinated with the idea that a computer could talk to you,” Tellex says, “that a computer could be alive, like a person is alive.” Even ELIZA’s rote answers gave her a glimmer of what might be possible.

In college, Tellex worked on computational linguistics. For one project, she wrote an algorithm that answered questions about a block of text, replying to a question such as “Who shot Lincoln?” with “John Wilkes Booth.” But “I got really disillusioned with it,” Tellex says. “It basically boiled down to counting up how many words appeared with other words, and then trying to produce an answer based on statistics. It just felt like something was fundamentally missing in terms of what language is.”

For her PhD, Tellex worked with Deb Roy at the MIT Media Lab, who shared her disillusionment. “He said, ‘Yeah, what’s missing is perception and action. You have to be connected to the world,’” Tellex recalls. Language is about something: an object, an event, an intent, all of which we learn through experience and interaction. So to master language, Roy was saying, computers might need such experience, too. “And that felt really right to me,” she says. “Plus, robots are awesome. So I basically became a roboticist.”

Tellex, who is now at Brown University, combines both of her interests in “Robots That Use Language,” a survey that she and three colleagues published in the 2020 Annual Review of Control, Robotics, and Autonomous Systems. From a practical standpoint, language offers an intuitive way for users to guide machines in a host of applications, including home care, factories, surgical suites, search and rescue, construction, tutoring and autonomous vehicles.

“Language is the right interface when you have untrained users, under high cognitive load, interacting with a system that’s really complicated,” Tellex says. That’s especially true in situations such as elder care, adds coauthor Cynthia Matuszek, a roboticist at the University of Maryland, Baltimore County. Imagine an 80-year-old asking her home-care robot to cook something new for lunch: “I’m tired of tomato soup; make me a sandwich.” Well, Matuszek says, “if it’s like, ‘Okay, I’m gonna have to call a team of programmers for that,’ that’s not a useful robot.”

At the same time, however, Tellex and her coauthors emphasize that a robot’s ability to follow natural-language commands — “words to world,” we might call it — can reinforce its ability to understand those commands: world to words. Experience with sitting, for example — the kind of experience a disembodied Siri or Alexa doesn’t have — might help a robot identify and fetch a chair or some suitable alternative when it’s asked for one.

Being physically embedded in the world could also help robots deal with the fact that much of our language (and perhaps even our thought) is metaphorical. Understanding that a “big idea” really means “an important notion,” for example, may be enhanced by experience with physical size.

Real-world experience could even help robots cope with the notorious ambiguity of natural language, its reliance on lots of background knowledge about the world and its assumption that the listener can infer a speaker’s intent. “I’m hungry” might really mean “Make me a sandwich,” which in turn requires an understanding of sandwiches: what they’re for and how to make one. Bodies might give computers common sense, or at least a better semblance of it. That would address one critique of large language models made in “On the Dangers of Stochastic Parrots,” an under-review paper that has been linked to the computer scientist Timnit Gebru’s recent dramatic departure from Google: that such word-counting algorithms can sound coherent while lacking comprehension.

In short, Matuszek says, combining robotics and language complicates both, but they have the potential to bolster each other.

In practice, of course, learning language isn’t nearly as easy as it looks, real-world experience or not. But in the past decade or so, progress in the robots-and-language realm has picked up. This is thanks to the development of “deep-learning” systems, which operate in a way reminiscent of the brain and can map rich patterns of speech and text to rich patterns of perception and action. “I think we’ll see it in our lifetimes,” Matuszek says. “If you’re like, ‘Get the thus and such,’ that will be pretty near term. ‘Get me my favorite mug and serve dinner’: harder, right? It’s a field, and fields make progress in fits and starts.”

Some robots can learn new things to better understand what a person says to them. This robot didn’t understand the word “rattling” after being given a command, so it asked the researcher Jesse Thomason to demonstrate objects that rattle.

CREDIT: NICK WALKER

From here to there

When might you want to speak to a robot? One likely situation would be when you tell it to go somewhere to do something for you, like fetch you a drink from the kitchen. So researchers have thought hard about combining language with navigation.

One well-studied task of this type is Room-to-Room (R2R), introduced in 2018. A database contains dozens of virtual building interiors with photo-realistic graphics, a set of pathways through the rooms and human-generated descriptions of those trajectories. Virtual environments like this are common in robotics research. The aim is to develop algorithms that work in simulation — where tasks can be completed faster than in real life, and expensive robots don’t experience wear and tear — and then transfer them to the real world. In the R2R case, the task is for a virtual robot to follow directions such as, “Head upstairs and walk past the piano through an archway directly in front. Turn right when the hallway ends at pictures and table. Wait by the moose antlers hanging on the wall.”

The paper that introduced the R2R task also presented a simple machine-learning algorithm to tackle it. Implementing a “sequence-to-sequence” architecture, this system takes in a sequence of words and outputs a sequence of action commands, rather like translating from one language to another. In between is a neural network, an arrangement of simple computing elements roughly mimicking the brain’s wiring. The network has sub-networks specialized for handling language (the instructions) and images (what the virtual robot sees). During training, the strengths of its connections are adjusted bit by bit so that the actions it chooses more closely match those along the example routes.
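To make the “words in, actions out” idea concrete, here is a toy sequence-to-sequence navigator written in plain Python. It is a minimal sketch under stated assumptions, not the R2R paper’s model: the action names, the tiny layer sizes, the `observe` camera callback and the random, untrained weights are all hypothetical. An encoder folds the instruction into a single state vector; a decoder then repeatedly mixes in image features and picks the highest-scoring action until it chooses “stop.” Because nothing here is trained, the actions it produces are arbitrary; training would adjust the weights so the scores favor the action the reference route took at each step.

```python
import math
import random

# Hypothetical discrete action set for a simulated indoor robot.
ACTIONS = ["forward", "turn left", "turn right", "look up", "look down", "stop"]


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def tanh_sum(*vectors):
    """Element-wise tanh of the sum of several equal-length vectors."""
    return [math.tanh(sum(vals)) for vals in zip(*vectors)]


class Seq2SeqNavigator:
    """Toy encoder-decoder: instruction words in, action sequence out (untrained)."""

    def __init__(self, vocab, hidden=8, image_dim=4, seed=0):
        rng = random.Random(seed)

        def rand(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

        self.index = {w: i for i, w in enumerate(list(vocab) + ["<unk>"])}
        self.embed = rand(len(self.index), hidden)  # one vector per known word
        self.enc_in, self.enc_rec = rand(hidden, hidden), rand(hidden, hidden)
        self.dec_img, self.dec_rec = rand(hidden, image_dim), rand(hidden, hidden)
        self.out = rand(len(ACTIONS), hidden)  # hidden state -> one score per action
        self.hidden = hidden

    def encode(self, words):
        """Language sub-network: a simple recurrent pass over the instruction."""
        h = [0.0] * self.hidden
        for w in words:
            x = self.embed[self.index.get(w, self.index["<unk>"])]
            h = tanh_sum(matvec(self.enc_in, x), matvec(self.enc_rec, h))
        return h

    def decode(self, h, observe, max_steps=10):
        """Decoder: at each step, mix in what the robot sees, then pick an action."""
        actions = []
        for t in range(max_steps):
            h = tanh_sum(matvec(self.dec_img, observe(t)), matvec(self.dec_rec, h))
            scores = matvec(self.out, h)
            action = ACTIONS[max(range(len(ACTIONS)), key=lambda i: scores[i])]
            actions.append(action)
            if action == "stop":
                break
        return actions

    def follow(self, instruction, observe, max_steps=10):
        words = instruction.lower().replace(",", "").replace(".", "").split()
        return self.decode(self.encode(words), observe, max_steps)


if __name__ == "__main__":
    nav = Seq2SeqNavigator(["head", "upstairs", "and", "walk", "past", "the", "piano"])
    fake_camera = lambda t: [0.1 * t, 0.0, 1.0, -0.5]  # stand-in image features
    print(nav.follow("Head upstairs and walk past the piano.", fake_camera))
```

The fixed `max_steps` cap keeps the decoder from wandering forever if it never scores “stop” highest, a common guard in sequence decoders.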

After training, the sequence-to-sequence model in the paper succeeded about 20 percent of the time. But since then, other researchers working on R2R have refined the methods and achieved success rates of over 70 percent. One system even accelerates its learning by attempting auxiliary tasks like explaining its actions (“I step in the door and go forward”) or estimating its progress (“I have completed 50 percent”).
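One common way to set up a progress-estimation auxiliary task is to give the agent a second output that predicts what fraction of the route it has covered, and to add that prediction’s error to the main navigation loss so that one shared network is trained on both. The sketch below assumes this setup; the target definition (steps taken divided by total steps), the squared-error loss and the weight `aux_weight` are illustrative choices, not the published system’s.

```python
def progress_targets(num_steps):
    """Fraction of the route completed after each step: 1/n, 2/n, ..., 1."""
    return [(t + 1) / num_steps for t in range(num_steps)]


def combined_loss(nav_loss, predicted_progress, num_steps, aux_weight=0.5):
    """Main navigation loss plus a weighted auxiliary progress-estimation loss.

    nav_loss: the usual action-prediction loss for this route (a float).
    predicted_progress: the agent's guess, after each step, of how far along it is.
    aux_weight: hypothetical knob trading off the two objectives.
    """
    if len(predicted_progress) != num_steps:
        raise ValueError("need one progress estimate per step")
    targets = progress_targets(num_steps)
    progress_loss = sum((p - y) ** 2 for p, y in zip(predicted_progress, targets)) / num_steps
    return nav_loss + aux_weight * progress_loss


if __name__ == "__main__":
    print(progress_targets(4))  # [0.25, 0.5, 0.75, 1.0]
    print(combined_loss(1.0, [0.0, 0.0, 0.0, 0.0], 4, aux_weight=1.0))  # 1.46875
```

A perfect progress estimate adds nothing to the loss, so the auxiliary term only pushes on the network when its sense of “how far along am I” is wrong.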

Some researchers also work on navigation with physical robots. At a conference this year, for example, Cornell University computer scientist Yoav Artzi and his colleagues reported success at turning natural-language instructions into navigation for a physical quadcopter. The quadcopter flew over an area about five meters square scattered with various objects. Its control software learned, from a combination of simulated and real data, to follow directions like this one: “After the blue bale take a right towards the small white bush, before the white bush take a right and head towards the right side of the banana.” It received top marks from human judges about 40 percent of the time. That’s well above the 15 percent success rate of a system that just takes average actions, but it leaves plenty of room for progress, a sign of how difficult the task is.
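A baseline that “just takes average actions” is useful because any system that actually reads the instructions has to beat it. The sketch below shows one way such a baseline could be built, assuming (hypothetically) that each flight command is a pair of forward speed and yaw rate; the article does not specify the quadcopter’s action format.

```python
def fit_average_policy(demonstrations):
    """Return a 'policy' that always outputs the mean command seen in training.

    demonstrations: list of trajectories, each a list of
    (forward_speed, yaw_rate) commands (a hypothetical action format).
    """
    commands = [cmd for demo in demonstrations for cmd in demo]
    if not commands:
        raise ValueError("need at least one command")
    mean = tuple(sum(dim) / len(commands) for dim in zip(*commands))

    def policy(instruction, observation):
        # Ignores both the words and the camera: that is what makes it a baseline.
        return mean

    return policy


if __name__ == "__main__":
    demos = [[(1.0, 0.5), (0.5, -0.5)], [(0.0, 0.0)]]
    average_policy = fit_average_policy(demos)
    print(average_policy("take a right towards the small white bush", None))  # (0.5, 0.0)
```

Such a policy can still land near some goals by chance, which is why the reported gap between it and the learned system is the more telling number.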

Now what?

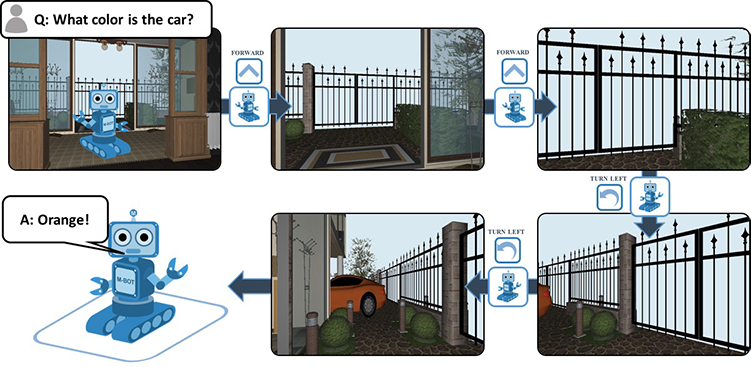

We want to be able to tell robots not only to navigate to places, but also to do things once they get there. Your sandwich-fetching robot needs to understand not only “Go to the kitchen,” but also “Make a ham-on-rye.” Or it should at least be able to answer questions about what it sees when it gets there, such as “Are we out of bread?” In 2018, researchers at Georgia Tech and Facebook created a task (and a dataset) called embodied question answering (EQA). A virtual robot navigates virtual homes, answering questions like “What color is the car?” It must reason that the car is in the garage, go there, look at it and answer, using an algorithm that combines components for vision, question processing, navigation and answer production.
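The hand-offs between those four components can be sketched in a few lines. Real EQA agents learn each component as a neural network; the version below swaps in trivial stand-ins purely to show the flow: a string-splitting parser for question processing, breadth-first search over a hypothetical room graph for navigation, and a lookup table in place of vision. A learned navigator would head for the garage because that is where cars usually are, whereas breadth-first search simply checks rooms in order of distance.

```python
from collections import deque

# Hypothetical toy house: which rooms connect, and what is visible in each.
HOUSE = {
    "living room": ["kitchen", "hallway"],
    "kitchen": ["living room"],
    "hallway": ["living room", "garage"],
    "garage": ["hallway"],
}
OBJECTS = {
    "garage": {"car": {"color": "red"}},
    "kitchen": {"sink": {"color": "white"}},
}


def parse_question(question):
    """Question processing stand-in: 'What color is the car?' -> ('color', 'car')."""
    words = question.lower().rstrip("?").split()
    return words[1], words[-1]


def navigate(start, target):
    """Navigation stand-in: breadth-first search until the target object is in view."""
    frontier = deque([[start]])
    seen = {start}
    while frontier:
        path = frontier.popleft()
        room = path[-1]
        if target in OBJECTS.get(room, {}):
            return path
        for nxt in HOUSE[room]:
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(path + [nxt])
    return None


def look(room, obj):
    """Vision stand-in: read off the attributes of an object visible in the room."""
    return OBJECTS[room][obj]


def answer(question, start):
    """Answer production: chain the other three components together."""
    attribute, obj = parse_question(question)
    path = navigate(start, obj)
    if path is None:
        return None, "I could not find it."
    return path, look(path[-1], obj)[attribute]


if __name__ == "__main__":
    print(answer("What color is the car?", "living room"))
    # (['living room', 'hallway', 'garage'], 'red')
```

Keeping the components separate like this makes it easy to see which one failed when an answer is wrong, one reason modular designs remain common in embodied AI.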

Working with the same virtual environment, other researchers followed up with a harder task, multi-target EQA, in which the virtual robot must answer questions like “Does the dressing table in the bedroom have the same color as the sink in the bathroom?” Their system was about 60 percent accurate.

In the Embodied Question Answering task, a virtual robot needs to navigate its virtual environment to find the answers to researchers’ questions.

CREDIT: A. DAS ET AL / PROCEEDINGS OF THE IEEE CONFERENCE ON COMPUTER VISION AND PATTERN RECOGNITION (CVPR) 2018

Recently, researchers published ALFRED, a virtual robot manipulation task consisting of many domestic scenes filled with objects. Commands include things like “Put a hot potato slice on the counter,” which requires the robot to figure out that it needs to heat a potato, slice it and place the slice on the counter. The researchers’ neural network succeeded less than 1 percent of the time. To do better, they may need to supplement the deep-learning network with systems of handwritten rules, an older approach often called good old-fashioned AI (GOFAI). Some researchers, in fact, argue that we will need hybrid GOFAI–deep-learning systems for the foreseeable future.
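To give a flavor of the handwritten-rules approach, here is a tiny GOFAI-style planner that expands a command such as “Put a hot potato slice on the counter” into sub-steps. The rule table, the step names and the rigid “put a/an … on the …” command pattern are all hypothetical illustrations, not part of ALFRED. The sketch also shows GOFAI’s usual weakness: anything the rule writer did not anticipate is simply rejected.

```python
# Hypothetical hand-written rules: a property word maps to the sub-steps it requires.
PROPERTY_RULES = {
    "hot": ["heat {obj}"],
    "cold": ["cool {obj}"],
    "clean": ["rinse {obj}"],
}


def plan(command):
    """Expand 'put a/an [properties] <object> [slice] on the <place>' into steps."""
    words = command.lower().rstrip(".").split()
    if len(words) < 6 or words[0] != "put" or words[1] not in ("a", "an") or "on" not in words:
        raise ValueError(f"no rule matches: {command!r}")
    on = words.index("on")
    phrase, dest = words[2:on], words[-1]
    sliced = bool(phrase) and phrase[-1] == "slice"
    if sliced:
        phrase = phrase[:-1]
    if not phrase:
        raise ValueError(f"no object named in: {command!r}")
    obj, props = phrase[-1], phrase[:-1]

    steps = [f"find {obj}"]
    for prop in props:
        if prop not in PROPERTY_RULES:
            raise ValueError(f"no rule for property {prop!r}")
        steps += [rule.format(obj=obj) for rule in PROPERTY_RULES[prop]]
    if sliced:
        steps += [f"slice {obj}", f"pick up {obj} slice"]
        item = f"{obj} slice"
    else:
        steps.append(f"pick up {obj}")
        item = obj
    steps.append(f"put {item} on {dest}")
    return steps


if __name__ == "__main__":
    print(plan("Put a hot potato slice on the counter."))
    # ['find potato', 'heat potato', 'slice potato', 'pick up potato slice', 'put potato slice on counter']
```

In a hybrid system, rules like these might supply the step structure while learned networks handle perception, such as finding the potato in a cluttered kitchen.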

Perhaps the biggest single limitation of deep learning is its reliance on big data. Children can sometimes learn from one example, whereas artificial neural networks can require training on thousands or millions. Google researchers have sought a way to reduce deep learning’s need for data with an algorithm called “language-conditioned learning from play.” A person controls a multi-jointed robot arm in a virtual space called 3D Playroom, containing a desk with a door, a drawer, three buttons, a block and a trash bin. The person just plays around, and the computer learns in part by imitating what the person does.
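How can aimless play become training data? As we understand the general idea behind learning from play, a long play log is cut into short windows, and each window is relabeled as if the person had been *trying* to reach whatever state they ended up in; a small fraction of windows also get a human-written description, so the same examples can be conditioned on words. The sketch below shows only that relabeling-plus-imitation idea. The state and action names are hypothetical, and an exact-match vote stands in for the neural network a real system would train.

```python
from collections import Counter


def relabel_play(states, actions, window, descriptions=None):
    """Turn one unstructured play log into goal-conditioned imitation examples.

    states has one more entry than actions: states[t] --actions[t]--> states[t+1].
    Each window's goal is the state reached at its end; if descriptions maps a
    window's start index to a sentence, that sentence is used as a goal too.
    Returns (state, goal, action) triples.
    """
    descriptions = descriptions or {}
    examples = []
    for start in range(len(actions) - window + 1):
        goals = [states[start + window]]
        if start in descriptions:
            goals.append(descriptions[start])
        for goal in goals:
            for t in range(start, start + window):
                examples.append((states[t], goal, actions[t]))
    return examples


def imitate(examples, state, goal):
    """Do what the person most often did from this state toward this goal."""
    votes = Counter(a for s, g, a in examples if s == state and g == goal)
    return votes.most_common(1)[0][0] if votes else None


if __name__ == "__main__":
    states = ["drawer closed", "drawer half open", "drawer open"]
    actions = ["pull handle", "pull handle"]
    data = relabel_play(states, actions, window=2, descriptions={0: "open the drawer"})
    print(imitate(data, "drawer closed", "open the drawer"))  # pull handle
```

The appeal is that no one has to label every demonstration: the play log supplies its own goals, and only a few windows need words attached.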

One of Tellex’s favorite projects was a forklift she worked on as a postdoc at MIT that used GOFAI to follow commands like “Put the pallet of pipes on the truck.” “What I loved about that project,” she says, “was that it was a real application where there was a robot that was doing real stuff in the environment.”

In the 3D Playroom virtual environment, a robot learns to follow instructions and manipulate objects.

CREDIT: COREY LYNCH AND PIERRE SERMANET

Tell me more

We want robots to do more than follow instructions. We want to converse and collaborate with them. That’s not always easy, says Jesse Thomason, a computer scientist who next year will join the University of Southern California. What people actually say is often highly ambiguous, if not outright gibberish. “We can point to another person,” he says, “and be like, ‘Hey, can you help me with that thing I said earlier?’ And they will understand exactly what that means.” Frequently, people don’t even speak in complete sentences or with perfect grammar.

To tackle that problem, Thomason and collaborators trained a virtual robot to ask questions in response to instructions, simultaneously improving its ability to parse sentences and perceive the world. They then implanted that intelligence in a physical robot, an arm on a Segway that roamed around a set of offices. In one instance, a person told the robot, “Move a rattling container from the lounge by the conference room to Bob’s office.” It didn’t know what “rattling” meant, so it asked for a few examples of things that do and don’t rattle. The person shook a pill jar, a container of beans and a water bottle. The robot then went and found the item, delivering it successfully.
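The “rattling” episode combines two steps: noticing that a word in the command has no grounded meaning yet, and learning that meaning from a few yes-and-no examples. The sketch below illustrates both with a nearest-centroid classifier, a deliberately simple technique swapped in for illustration and not necessarily what Thomason’s team used. The feature values (how much high-pitched sound an object makes when shaken, and its weight) and the objects in the lounge are hypothetical.

```python
import math


def unknown_words(command, lexicon):
    """Words in the command that the robot has no grounded meaning for yet."""
    return [w for w in command.lower().rstrip(".").split() if w not in lexicon]


def centroid(vectors):
    """Average of equal-length feature vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def learn_word(positives, negatives):
    """Nearest-centroid classifier: is a new object more like the 'yes'
    examples or the 'no' examples?"""
    pos_c, neg_c = centroid(positives), centroid(negatives)

    def applies(features):
        return math.dist(features, pos_c) < math.dist(features, neg_c)

    return applies


if __name__ == "__main__":
    print(unknown_words("Move a rattling container", {"move", "a", "container"}))
    # ['rattling']

    # Hypothetical features: (shake-sound energy, weight in kg).
    rattling = learn_word(
        positives=[(0.9, 0.1), (0.8, 0.4)],  # pill jar, container of beans
        negatives=[(0.1, 0.5)],              # water bottle
    )
    lounge = {"mug": (0.05, 0.3), "box of paperclips": (0.75, 0.2)}
    print([name for name, f in lounge.items() if rattling(f)])  # ['box of paperclips']
```

With only three examples the learned boundary is crude, which is why asking for a few more examples, as the robot did, is a sensible strategy when it is unsure.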

Baby talk, baby steps

“Overall, natural language control of robotic agents is largely an open problem,” Artzi says. “I can’t say there are impressive working examples. Maybe impressive from a research standpoint, but far from reaching the vision we have in mind.”

Still, datasets and algorithms and computation are all improving. Plus, Thomason notes, simulated environments for experimentation are becoming more realistic. And at the same time autonomous robots are getting cheaper and widening their commercial presence through companies like Skydio (aerial drones) and Waymo (self-driving cars). We’ve come a long way from the forklift Tellex labored on a decade ago. As she says, “I see the frontiers for language and robots opening up.”